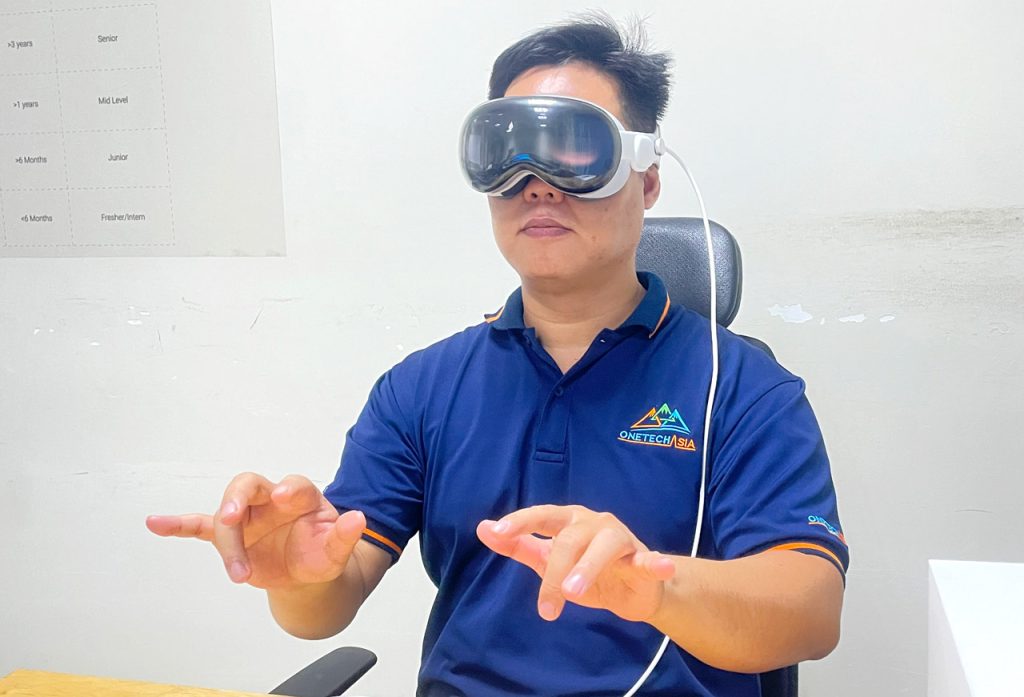

The world of augmented reality (AR) and virtual reality (VR) is becoming more accessible than ever, and the Apple Vision Pro marks a significant leap forward in this field. With this headset, Apple has given developers a new platform for building immersive, interactive experiences. So, what do you need to start developing apps for Vision Pro?

Introducing VisionOS

What is Apple Vision Pro? Launched in February 2024, the Apple Vision Pro is Apple’s first spatial computer, a headset that blends augmented and virtual reality (AR/VR) to deliver entirely new user experiences. It pairs a modern design with advanced hardware: an ultra-high-resolution micro-OLED display system, Apple’s M2 chip, a 3D camera for capturing spatial photos and videos, and an eye- and hand-tracking input system.

What is VisionOS? VisionOS is the operating system built specifically for Apple Vision Pro, providing a robust platform for developing immersive apps and games for spatial computing. Apple uses the term “spatial computing” to cover both virtual and augmented reality experiences.

VisionOS supports both augmented reality (AR) and virtual reality (VR) experiences and is the heart of Apple Vision Pro, providing its distinctive features, apps, and functionality. Architecturally, VisionOS builds on the same core foundations as macOS and iOS, adding a real-time subsystem that handles the interactive visuals on Apple Vision Pro.

Key Features of VisionOS

- Leveraging Hardware: VisionOS takes full advantage of Apple Vision Pro’s dual-chip design. The M2 chip runs VisionOS and your apps, while the R1 chip processes input from 12 cameras, 5 sensors, and 6 microphones.

- Audio Ray Tracing: Analyzes the acoustic properties of your room so that Spatial Audio sounds as though it comes from within your physical space.

- Digital Crown: Turn it to control how immersed you are in an environment; press it to open the Home View. It is also used during setup, when the headset automatically aligns its displays to your eyes.

- Personas: Scans your face to create a real-time personalized avatar.

- Spatial: Apple’s Spatial framework, a lightweight 3D math library available on VisionOS, provides trigonometric functions for spatial angles, spherical linear interpolation (slerp) between rotations, and swing-twist decomposition of rotations.

- EyeSight: Shows a view of the wearer’s eyes on the headset’s outer display, so people nearby can tell whether you are engaged with them or immersed, and can interact with you more naturally.

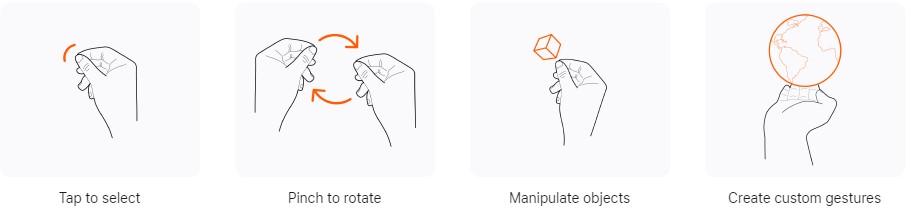

- Natural Interaction: Users can interact with apps intuitively and naturally through eye movements, hand gestures, and voice.

- 3D FaceTime: In FaceTime calls, Vision Pro represents you with your Persona, an animated 3D version of your face created from a sensor scan that mirrors your expressions in real time, making calls feel more realistic and personal.

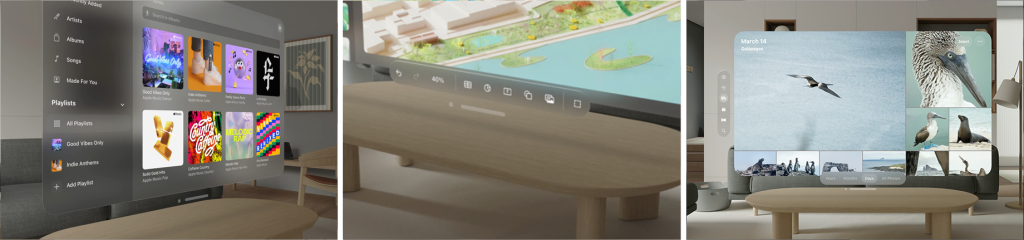

- Blending Real and Virtual Worlds: Vision Pro apps can place virtual content within the user’s real surroundings or replace those surroundings entirely, so the same device supports both AR-style and fully immersive VR-style experiences.

- 3D Media: Capture and play back spatial (3D) photos and videos.

- Multitasking: Run multiple app windows at once and arrange them anywhere in the 3D space around you.

- Workspace: Supports productivity apps such as Microsoft Excel, Word, and Teams, as well as conferencing tools like Zoom and Webex.

- Entertainment: Provides access to more than 100 Apple Arcade games, along with other content and Disney+ experiences.

- Synchronization: Works alongside your Mac and iPhone; for example, Mac Virtual Display brings your Mac’s screen into Vision Pro as a large virtual display.

- Compatibility: Runs compatible iPhone and iPad apps in 2D windows; developers can choose to opt out of making their apps available.

- Security: Apple Vision Pro uses Optic ID, which authenticates the wearer by scanning the iris, for enhanced security.
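The Spatial item in the list above mentions spherical linear interpolation (slerp), which blends one 3D rotation into another at constant angular speed along the shortest arc. The sketch below implements quaternion slerp in plain Swift so it runs anywhere; the `Quat` type and `slerp` function are hypothetical names for illustration, not the API of Apple’s Spatial framework. On Apple platforms you would normally use the simd module’s `simd_slerp` or Spatial’s `Rotation3D` type instead.

```swift
import Foundation

/// A unit quaternion w + xi + yj + zk representing a 3D rotation.
/// Hypothetical type for illustration only; not the Spatial framework's API.
struct Quat {
    var w, x, y, z: Double

    func dot(_ o: Quat) -> Double { w * o.w + x * o.x + y * o.y + z * o.z }
    func scaled(_ s: Double) -> Quat { Quat(w: w * s, x: x * s, y: y * s, z: z * s) }
    static func + (a: Quat, b: Quat) -> Quat {
        Quat(w: a.w + b.w, x: a.x + b.x, y: a.y + b.y, z: a.z + b.z)
    }
}

extension Quat {
    /// Rotation of `angle` radians about the axis (axisX, axisY, axisZ); the axis need not be normalized.
    init(angle: Double, axisX: Double, axisY: Double, axisZ: Double) {
        let len = (axisX * axisX + axisY * axisY + axisZ * axisZ).squareRoot()
        let s = sin(angle / 2) / len
        self.init(w: cos(angle / 2), x: axisX * s, y: axisY * s, z: axisZ * s)
    }
}

/// Spherical linear interpolation from `a` (t = 0) to `b` (t = 1) along the shorter arc.
func slerp(_ a: Quat, _ b: Quat, _ t: Double) -> Quat {
    var b = b
    var cosTheta = a.dot(b)
    // q and -q encode the same rotation; negate b so we take the shorter path.
    if cosTheta < 0 {
        b = b.scaled(-1)
        cosTheta = -cosTheta
    }
    // For nearly identical rotations sin(theta) is close to 0, so fall back to normalized lerp.
    if cosTheta > 0.9995 {
        let r = a.scaled(1 - t) + b.scaled(t)
        return r.scaled(1 / r.dot(r).squareRoot())
    }
    let theta = acos(cosTheta)
    let sinTheta = sin(theta)
    return a.scaled(sin((1 - t) * theta) / sinTheta) + b.scaled(sin(t * theta) / sinTheta)
}

// Halfway between "no rotation" and a 90° turn about the y (up) axis is a 45° turn.
let identity = Quat(w: 1, x: 0, y: 0, z: 0)
let quarterTurn = Quat(angle: .pi / 2, axisX: 0, axisY: 1, axisZ: 0)
let halfway = slerp(identity, quarterTurn, 0.5)
let halfwayDegrees = 2 * acos(halfway.w) * 180 / .pi
print(halfwayDegrees)  // approximately 45
```

Working with quaternions rather than Euler angles avoids gimbal lock, and the short-arc flip keeps animations from taking the long way around.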

Developing VisionOS Apps

Hardware:

VisionOS development requires a Mac with Apple silicon: the VisionOS SDK and simulator run only on Apple silicon Macs. The simulator is useful for layout and basic interaction, but to test features that depend on the headset’s sensors, such as hand tracking and world tracking, you need a physical Apple Vision Pro.

Development Environment:

- Xcode: Xcode is Apple’s IDE for developing, testing, and distributing apps on all Apple platforms, including VisionOS. You’ll need Xcode 15.2 or later running on an Apple silicon Mac.

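- RealityKit and Reality Composer Pro: Reality Composer Pro, which ships with Xcode, lets you create, edit, and preview 3D content that RealityKit then renders in your app; Apple’s Diorama sample project demonstrates this workflow. Swift Playgrounds is a lighter-weight option for learning Swift itself.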

- Unity Pro: If you already know Unity, you can also use it to develop VisionOS apps. Unity PolySpatial is the set of technologies that lets Unity content run alongside other apps in the VisionOS Shared Space and interact with the physical world.

- SwiftUI: Design the user interface and behavior for your VisionOS app.

- ARKit: A framework that exposes the device’s sensor data to augmented reality apps and games. On VisionOS, ARKit supplies world tracking, hand tracking, plane detection, and scene understanding, while RealityKit takes care of rendering.

Learn SwiftUI:

- What is SwiftUI: SwiftUI is Apple’s declarative framework for building user interfaces across Apple platforms. On VisionOS it is the main way to build windows, volumes, and immersive spaces, and Xcode Previews let you see your interface update as you write code.

- Works with UIKit and AppKit: If you need an interface component that SwiftUI doesn’t offer, you can mix SwiftUI with UIKit (available on VisionOS) or AppKit (macOS only).

- Basic concepts: You need to master the basic concepts of SwiftUI such as View, State, Binding, and modifiers to be able to build application interfaces effectively.

- See more: https://developer.apple.com/xcode/swiftui/

Understanding Spatial Depth and Immersive Experiences:

- RealityKit: Provides high-performance 3D rendering and simulation for VisionOS apps, as well as for augmented reality (AR) apps on iOS, iPadOS, and macOS. RealityKit is an AR-first 3D framework that works with ARKit to integrate virtual objects seamlessly into the real world.

- 3D Space: In VisionOS, you work in 3D space, where RealityKit measures distances in meters. This requires understanding concepts like coordinate systems, viewing angles, and lighting.

- Depth: Creating a sense of depth is crucial in VisionOS apps. You can use visual and audio effects to enhance this feeling.

- Combining RealityKit and Metal: Use RealityKit and Metal to create complex visual effects and real-time interactions.

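- Leveraging Vision Pro Features: Integrate features like Eye Tracking, Hand Tracking, and Spatial Audio to create unique experiences. For privacy, apps don’t receive raw gaze data; instead, the system highlights the interface elements the user is looking at.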
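To make coordinates, viewing angles, and depth a little more concrete, the sketch below computes how far away a piece of content is, how far it sits from the viewer’s line of sight, and how its apparent (angular) size shrinks with distance, one of the visual depth cues described above. It assumes RealityKit-style conventions: units in meters and a right-handed coordinate system with +y up and the viewer facing -z. The `Vec3` type, the helper functions, and the 1.6 m eye height are hypothetical, chosen for illustration; a real app would use `SIMD3<Float>` and RealityKit’s transforms.

```swift
import Foundation

/// Minimal 3D vector in meters (hypothetical; real apps would use SIMD3<Float>).
struct Vec3 {
    var x, y, z: Double

    static func - (a: Vec3, b: Vec3) -> Vec3 {
        Vec3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z)
    }
    func dot(_ o: Vec3) -> Double { x * o.x + y * o.y + z * o.z }
    var length: Double { dot(self).squareRoot() }
}

/// Angle in degrees between the viewer's forward direction and the direction to `target`.
func viewingAngleDegrees(eye: Vec3, forward: Vec3, target: Vec3) -> Double {
    let toTarget = target - eye
    let cosine = forward.dot(toTarget) / (forward.length * toTarget.length)
    // Clamp so rounding error can never push acos outside its [-1, 1] domain.
    return acos(min(max(cosine, -1), 1)) * 180 / .pi
}

/// Angular size in degrees of an object `width` meters wide, seen face-on from `distance` meters.
func angularSizeDegrees(width: Double, distance: Double) -> Double {
    2 * atan(width / (2 * distance)) * 180 / .pi
}

// Assumed convention: +y is up, the viewer looks along -z, units are meters.
let eye = Vec3(x: 0, y: 1.6, z: 0)       // assumed standing eye height
let forward = Vec3(x: 0, y: 0, z: -1)    // looking straight ahead
let panel = Vec3(x: 1, y: 1.6, z: -1)    // 1 m to the right and 1 m ahead

let distance = (panel - eye).length                                          // about 1.41 m
let offAxis = viewingAngleDegrees(eye: eye, forward: forward, target: panel) // about 45°
let sizeAt1m = angularSizeDegrees(width: 0.5, distance: 1)
let sizeAt2m = angularSizeDegrees(width: 0.5, distance: 2)  // smaller, so it reads as farther away
print(distance, offAxis, sizeAt1m, sizeAt2m)
```

Because apparent size falls off roughly in proportion to distance, keeping important content within a modest angle of the user’s forward direction and at a comfortable distance helps it stay both visible and legible.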

References:

- Official Apple Documentation: https://developer.apple.com/documentation/visionos

- Unity PolySpatial visionOS: https://docs.unity3d.com/Packages/com.unity.polyspatial.visionos@1.0/

- Unity Spatial: https://unity.com/campaign/spatial

- RealityKit: https://developer.apple.com/documentation/RealityKit

- SwiftUI: https://developer.apple.com/documentation/SwiftUI

- ARKit: https://developer.apple.com/documentation/arkit

Additional Considerations for VisionOS App Development

Beyond the foundational elements discussed earlier, here are some additional considerations to keep in mind when developing apps for Apple Vision Pro:

1. User Interface Design:

- Intuitive Interactions: Design interactions that are natural and intuitive for users, leveraging features like eye tracking and hand gestures.

- Clear Visuals: Ensure that UI elements are easily visible and understandable in the virtual environment.

- Spatial Awareness: Consider the spatial context of the app and design elements that fit seamlessly within the 3D space.

2. Performance Optimization:

- Efficient Rendering: Optimize your app’s rendering performance to avoid frame rate drops or lag.

- Resource Management: Manage memory and CPU usage carefully to ensure a smooth experience.

- Battery Efficiency: Consider battery consumption when developing resource-intensive features.
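A concrete way to think about efficient rendering is a per-frame time budget: at a given refresh rate, all of a frame’s work must finish within 1000 / refresh-rate milliseconds, or the frame is late. The sketch below assumes a 90 Hz refresh rate (about 11.1 ms per frame) and flags the frames that overran it; the function names and the sample timings are hypothetical, and in practice you would collect real frame times with Instruments in Xcode.

```swift
import Foundation

/// Time available to produce one frame, in milliseconds, at the given refresh rate.
func frameBudgetMs(refreshHz: Double) -> Double {
    1000 / refreshHz
}

/// Indices of frames whose duration exceeded the budget (each one risks a visible hitch).
func overBudgetFrames(_ frameTimesMs: [Double], refreshHz: Double) -> [Int] {
    let budget = frameBudgetMs(refreshHz: refreshHz)
    return frameTimesMs.indices.filter { frameTimesMs[$0] > budget }
}

// Hypothetical frame timings in milliseconds; 90 Hz is an assumed refresh rate.
let frameTimes: [Double] = [9.8, 10.5, 12.4, 11.0, 15.2]
let budget = frameBudgetMs(refreshHz: 90)                     // about 11.1 ms
let slowFrames = overBudgetFrames(frameTimes, refreshHz: 90)  // [2, 4]
let dropRate = Double(slowFrames.count) / Double(frameTimes.count)
print("budget:", budget, "ms; slow frames:", slowFrames, "; share:", dropRate)
```

Thinking in milliseconds per frame, rather than average frames per second, makes occasional spikes visible; a single 15 ms frame can cause a noticeable stutter even when the average looks fine.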

3. Accessibility:

- Inclusive Design: Ensure your app is accessible to users with disabilities by providing features like voice control, closed captions, and high-contrast modes.
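One way to check high-contrast designs objectively is the contrast-ratio formula from the W3C’s Web Content Accessibility Guidelines (WCAG 2.x), which compares the relative luminance of two sRGB colors. This is a swapped-in, platform-neutral technique rather than a VisionOS API, and the function names below are hypothetical. Because VisionOS windows often sit on translucent glass over the real world, treat the result as a rough check of your color choices, not a guarantee of legibility.

```swift
import Foundation

/// Converts one sRGB channel (0...1) to linear light, per the WCAG 2.x definition.
func linearize(_ c: Double) -> Double {
    c <= 0.03928 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

/// WCAG relative luminance of an sRGB color with channels in 0...1.
func relativeLuminance(r: Double, g: Double, b: Double) -> Double {
    0.2126 * linearize(r) + 0.7152 * linearize(g) + 0.0722 * linearize(b)
}

/// Contrast ratio between two luminances, from 1:1 (identical) to 21:1 (black on white).
func contrastRatio(_ l1: Double, _ l2: Double) -> Double {
    (max(l1, l2) + 0.05) / (min(l1, l2) + 0.05)
}

let white = relativeLuminance(r: 1, g: 1, b: 1)
let black = relativeLuminance(r: 0, g: 0, b: 0)
let midGray = relativeLuminance(r: 0.5, g: 0.5, b: 0.5)

let blackOnWhite = contrastRatio(black, white)   // about 21
let grayOnWhite = contrastRatio(midGray, white)  // about 3.98, below WCAG AA's 4.5:1 for body text
print(blackOnWhite, grayOnWhite)
```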

4. Testing and Debugging:

- Thorough Testing: Test your app both in the VisionOS simulator and on a physical Apple Vision Pro, in different rooms and lighting conditions.

- Debugging Tools: Utilize Xcode’s debugging tools to identify and fix issues efficiently.

5. App Store Submission:

- Guidelines: Adhere to Apple’s App Store guidelines for VisionOS apps, including content restrictions and performance requirements.

- Metadata: Provide accurate and informative metadata, including app description, screenshots, and keywords.

6. Continuous Updates:

- Iterative Development: Stay updated with VisionOS advancements and consider implementing new features or improvements in future app updates.

7. User Feedback:

- Gather Insights: Collect user feedback through ratings, reviews, and analytics to identify areas for improvement.

8. Explore Niche Opportunities:

- Unique Experiences: Consider developing apps that leverage VisionOS’s unique capabilities, such as educational experiences, medical simulations, or creative tools.

9. Collaborate with Other Developers:

- Community Engagement: Connect with other VisionOS developers to share knowledge, collaborate on projects, and stay informed about industry trends.

10. Stay Informed:

- Apple Developer Documentation: Regularly review Apple’s developer documentation for VisionOS to stay updated on new features, APIs, and best practices.

- Industry News: Follow industry news and blogs to stay informed about emerging trends and technologies.

By carefully considering these factors, you can develop high-quality and engaging VisionOS apps that provide exceptional user experiences and contribute to the growth of the AR/VR ecosystem.

Conclusion

Developing apps for Apple Vision Pro is a great opportunity to create innovative products on one of the most advanced XR devices available today. With the basic knowledge and resources we’ve shared, you can confidently begin exploring VisionOS app development.

XRTechLab believes that XR (VR/AR/MR) is a promising field with significant growth potential. We aim to partner with businesses and developers to create innovative and useful XR applications, providing users with the best possible experiences. If you have any questions about XR in general or about developing apps for Apple Vision Pro specifically, feel free to leave a comment below or email us.